Companionship without privacy

Thomas Ghys

Introduction

AI chatbots built on top of large-scale language models not only respond promptly and on par with humans, but they also summarize papers, generate images, write code, and compose music. One segment of AI chatbots takes language-based prompts one step further. AI companion chatbots ("AI Companions" in short) act as virtual friends, romantic partners or even therapists. Notable examples include Replika ("the AI companion who cares; always here to listen and talk; always on your side"), Chai ("a platform for AI friendship"), Character.ai ("super-intelligent AI chat bots that hear you, understand you, and remember you"), Snapchat's My AI ("your personal chatbot sidekick") and Pi ("designed to be supportive, smart, and there for you anytime").

While these apps undoubtedly have the potential to entertain, fight loneliness, or boost confidence, they can respond abusively, encourage misogyny, and cause self-harm. Philosopher Daniel Dennett argues that "one of the most imminent risks of AI chatbots [...] is the fact that we may not know whether words, images or sounds were created by another human or AI"1. AI Companions blur those lines as their value proposition. Linguistics Professor Emily Bender and DAIR Director of Research Alex Hanna also note that the output of chatbots "can seem so plausible that without a clear indication of its synthetic origins, it becomes a noxious and insidious pollutant of our information ecosystem"2.

While AI technology leaders such as OpenAI are calling for more regulation of AI, it is worth remembering that the generative AI ecosystem does not operate in a legal vacuum. Regulations around data protection, most notably the General Data Protection Regulation (GDPR) and the ePrivacy Directive (ePD), as well as consumer protection, content moderation and intellectual property law are already applicable and enforceable today.

This article explores how data protection rules apply to AI Companions and how AI Companion Providers ("Providers" in short) apply data protection controls. We conclude that, in their current state, most AI Companions are unlawful, deceptive and deployed at scale with significant risk for harm. These underlying privacy issues also lead to infringements of consumer protection law.

A short primer on language models

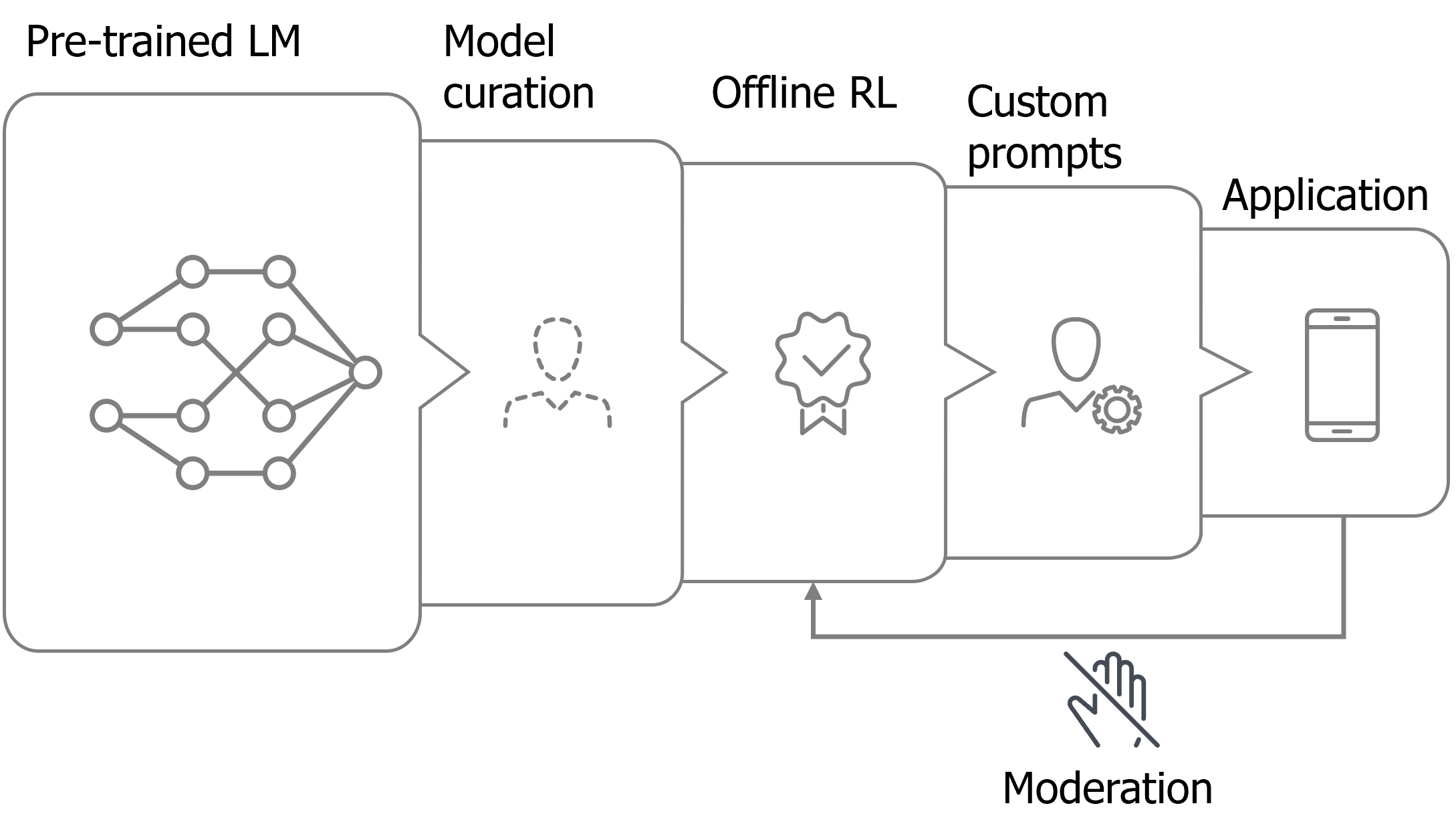

AI Companions are language models ('LM') designed to offer companionship. These language models go through multiple stages of training and refinement before they are embedded in a consumer-facing chat application. This section gives more background on language models and actors behind AI Companions.

Why language models are hard to align

Today, LMs essentially predict the next token on a webpage from the internet. By training on this task over inconceivable amounts of data, LMs become extremely proficient at generating text. They pick up intrinsic language constructs such as grammar, syntax, and semantics, without, however, having any actual understanding of the meaning behind the letters they generate. Moreover, these so-called "pre-trained" models don't automatically respond in a compelling way to user prompts. When asked a question, they may return a question or an incomplete sentence. OpenAI draws the analogy between training an LM and training a dog3. You can teach a dog to behave through countless rewards and punishments, but you can never fully prevent it from barking or biting. As a second important characteristic, LMs are inherently black boxes. Model builders steer inputs and outputs without an understanding of how text constructs and capabilities are encoded in the model.

LMs undergo multiple rounds of alignment to 'behave'. First, the LM can be curated by training it on a smaller, specifically sampled dataset. Second, reinforcement learning with human feedback ("RLHF") uses human preferences as rewards to fine-tune the LM and significantly improve alignment. InstructGPT4 by OpenAI introduced a popular approach that illustrates how RLHF works. A supervised baseline dataset compiles desired behavior from human-written demonstrations or sampled model responses. A reward model, another LM, is trained on preferred responses within comparison data of prompts and responses (e.g., by ranking multiple model responses). The reward model then scores the responses generated by the LM, and these calculated rewards are used to optimize the LM. In sum, LMs are unaligned by default and kept in check through multiple layers of resource-intensive and costly alignment steps.
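The reward-model step can be made concrete with a toy example. The sketch below is illustrative only: it replaces the reward LM with a linear model over two hypothetical hand-made features (`politeness`, `toxicity`), and it trains on comparison pairs with the pairwise loss $-\log \sigma(r_{chosen} - r_{rejected})$. Instead of a full reinforcement learning step, it simply picks the highest-scoring candidate response. All names and numbers are assumptions, not taken from any Provider.

```python
import math

# Hypothetical comparison data: each pair is (features of the preferred
# response, features of the rejected response). Features are assumed to be
# [politeness, toxicity] scores in the range 0..1.
COMPARISONS = [
    ([0.9, 0.1], [0.2, 0.8]),
    ([0.7, 0.0], [0.6, 0.9]),
    ([0.8, 0.2], [0.1, 0.3]),
]


def reward(weights, features):
    """Linear stand-in for the reward model: a weighted sum of features."""
    return sum(w * x for w, x in zip(weights, features))


def pairwise_loss(weights, chosen, rejected):
    """-log(sigmoid(margin)): small when the preferred response scores higher."""
    margin = reward(weights, chosen) - reward(weights, rejected)
    return math.log(1.0 + math.exp(-margin))


def train_reward_model(comparisons, n_features, lr=0.1, epochs=200):
    """Fit the weights with plain gradient descent on the pairwise loss."""
    weights = [0.0] * n_features
    for _ in range(epochs):
        for chosen, rejected in comparisons:
            margin = reward(weights, chosen) - reward(weights, rejected)
            sigmoid = 1.0 / (1.0 + math.exp(-margin))
            # d(loss)/d(margin) = -(1 - sigmoid(margin))
            grad_scale = -(1.0 - sigmoid)
            for i in range(n_features):
                weights[i] -= lr * grad_scale * (chosen[i] - rejected[i])
    return weights


def best_response(weights, candidates):
    """Pick the candidate name whose features get the highest reward."""
    return max(candidates, key=lambda name: reward(weights, candidates[name]))
```

With the assumed data, the learned weight for politeness ends up positive and the weight for toxicity negative, so `best_response` favors the polite candidate. A production pipeline would use the reward to update the LM itself rather than only to select among candidates.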

AI Companions are specifically finetuned to mimic a human-like personality that can enhance emotional engagement and even emotional attachment and dependency. Providers may even support custom prompts for tailoring to individual user preferences. Users of Character.ai and Chai, for example, can create their own instance of a chatbot by specifying prompts such as general intents or facts to remember. AI Companions also moderate online conversations through a combination of automated content filtering5, human moderators, and adversarial testing. The main business model of Providers centers on maintaining user engagement through the creation of emotional intimacy.

AI Companion value chain

Each training and alignment step towards an AI Companion can be performed by different parties in a closed-source or open-source environment. Inflection controls the entire value chain from LM to application with a single chatbot. By contrast, Chai builds a conversational AI platform that invites an open-source community to create chatbot instances. EleutherAI, a non-profit collective of AI researchers, has published a pre-trained model that Chai decided to build on. Chai curates and finetunes its own language model, deploys a chatbot application and sets moderation standards. Chai also engages a broad development community to build custom bots through custom prompting, offline reinforcement learning and moderation. It essentially standardizes the conversation style and experience within which open-source contributors make their own chatbot instances.

Most AI Companions are unlawful under the GDPR

Any purpose for which personal data are processed outside of a household setting6, whether publicly available or not, requires a valid reason in the form of one of the six legal bases listed in Article 6(1) GDPR. In turn, the principle of purpose limitation enshrined in Article 5(1)(b) GDPR requires every processing operation to be paired with a specific purpose. As a result, each processing operation must be paired with one or more purposes, and each purpose must be paired with a single legal basis. This also applies to the processing of personal data by Providers. Depending on the purpose pursued, three legal bases can potentially justify their processing operations, namely: the performance of a contract, consent, or their legitimate interest.

Comparing legal bases in the context of AI Companions

Performance of a contract

Providers enter into a paid or free contract when users sign up. The Provider needs to register contact details for billing and authentication for example. However, personal data usage based on a contract needs to be essential for entering into or adhering to that contract. Registered data can't be used for other purposes like advertising on social media.

The EDPB binding decisions about Meta7, which included behavioral advertising in its terms and conditions after the introduction of the GDPR, set an important precedent in this context. They were reinforced by the recent decision of the CJEU in Case C-252/21, in which the Court recalled that "in order for the processing of personal data to be regarded as necessary for the performance of a contract, within the meaning of that provision, it must be objectively indispensable for a purpose that is integral to the contractual obligation intended for the data subject".8 In addition, the EDPB clearly warns that "a contract cannot artificially expand the categories of personal data or types of processing operation that the controller needs to carry out for the performance of contract".9

Replika relies on performance of a contract to personalize chatbot interactions10. While personalization may enhance the conversation, Replika can arguably provide both its free and its paid chatbot service without it.11 Moreover, the PRO features of Replika do not depend on the level of personalization at the time of writing. Contract does not seem to be an appropriate legal basis in this example.

Consent

Consent offers users an option to freely give permission to use their data for clearly explained purposes. The GDPR sets a high bar for valid consent, in that it must be specific, informed, freely given, unambiguous and as easy to withdraw as to give.12 The complete absence of consent in many of the currently available AI Companions illustrates that Providers clearly want to avoid this legal basis. However, consent is the only viable option for several use cases:

- Gathering insights into the browser/device and conversations through tracking13;
- Profiling users to derive interests for chatbot engagement or advertising;
- Sharing personal data with third parties for advertising; and
- Accessing sensitive attributes about the user.

The lack of consent for these use cases alone justifies investigations by data protection authorities ("DPAs"). As discussed further below, it should be noted that consent does not preclude the obligation to also provide sufficient transparency about data processing activities, which is often lacking.

Legitimate interest

Put simply, legitimate interest allows Providers to use personal data in ways that users reasonably expect for a legitimate reason and in a fair way. Direct marketing and fraud prevention are typical examples. Unlike consent and performance of a contract, legitimate interest does not imply a one-to-one relationship with the user or a strict purpose limitation to the formulated consent or scope of the contract (see 4.1.1). Yet it is by no means a free pass to personal data access.

Legitimate interest entails a threefold test.14 The controller must demonstrate that:

- its interests are legitimate (the "Purpose test");
- processing is necessary to achieve those interests (the "Necessity test"); and
- it balances its own interests against the interests, fundamental freedoms and rights of data subjects (the "Balancing test").

AI Companion Providers can't easily rely on legitimate interest

The Providers of Pi, Chai and Character.ai ostensibly rely on legitimate interest for building and finetuning their models. We put both purposes to the test.

Training language models

LMs are trained on enormous amounts of text data scraped from the public internet. These sources inevitably contain personal data within the meaning of Article 4(1) of the GDPR and are typically not filtered for personal data.

As discussed in 4.1.3, legitimate interest, unlike consent and performance of a contract, does not require a one-to-one relationship between the data subject and the controller. However, scraping would fail the legitimate interest test if it violates copyright law and exceeds the reasonable expectations of individuals who have not been informed upfront.

The French DPA, CNIL, addressed "the systematic and widespread collection, from millions of websites worldwide, of images containing faces, using a proprietary technology to index freely accessible web pages" in its decision against Clearview AI15. The lack of a legal basis was also one of the motivations for the Italian DPA to temporarily ban ChatGPT in Italy16.

The public nature of personal data does not imply that any controller can re-use these data for their own purposes (cfr. Clearview AI). Individuals who have shared personal data, for example, on a social media platform or Wikipedia, were never informed about scraping for the purpose of training language models. It is hard to argue that individuals 'reasonably expect' their personal details to be used for LMs. In addition, they may have shared personal data on a semi-public platform and don't expect access outside of that context.

The lawfulness of training data is a clear area of attention for Providers such as Inflection building their own models. It should also concern Providers integrating an external LM such as Replika and Chai. As we discuss in chapter 5.1, Providers remain accountable for the compliance of pre-trained models.

Reinforcement learning on an existing language model

AI Companions often collect feedback within conversations through a rating scale (e.g., 0 to 4 stars) or a retry button to generate another response to the same prompt. While these mechanisms require a user action and are hence less opaque than covert tracking or analysis of conversations, they don't automatically pass the balancing test.

First, a complete lack of transparency about personal data usage, discussed at length in the next chapter, prevents users from having clear expectations about how feedback is used. Second, a general opt-out for contributing to reinforcement learning would give users clear agency over this purpose. We haven't seen any such choice to date. Lastly, unfiltered metadata about the user and chat history are likely disproportionate to the reinforcement learning purpose. Anonymising directly identifiable attributes is a bare minimum. Filtering out quasi-identifiers17, for example users sharing their address, date of birth or occupation in a chat, would significantly limit the risk of identifiability in training data. This also lowers the risk of sensitive associations when users reward specific responses (e.g., consistently rating explicit or extremist responses highly).
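As a rough illustration of such filtering, the sketch below strips user metadata from a feedback record and masks a few obvious identifiers in the chat text before the record is used for reinforcement learning. The record fields (`user_id`, `device`, `prompt`, `response`, `rating`) and the regular expressions are assumptions made for this example. Pattern matching of this kind only catches well-formatted values; free-text quasi-identifiers such as an occupation or a street address would need more capable detection.

```python
import re

# Illustrative patterns only; order matters because a dashed date such as
# 12-03-1990 would otherwise also match the loose phone pattern.
PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "DATE": re.compile(r"\b\d{1,2}[/.-]\d{1,2}[/.-]\d{2,4}\b"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}

# Only these (hypothetical) fields are needed to learn from user feedback.
FIELDS_NEEDED_FOR_TRAINING = ("prompt", "response", "rating")


def redact(message):
    """Replace each matched identifier with a placeholder such as [EMAIL]."""
    for label, pattern in PATTERNS.items():
        message = pattern.sub(f"[{label}]", message)
    return message


def prepare_feedback_record(record):
    """Drop metadata (user id, device, ...) and redact the remaining text."""
    minimal = {key: record[key] for key in FIELDS_NEEDED_FOR_TRAINING}
    minimal["prompt"] = redact(minimal["prompt"])
    minimal["response"] = redact(minimal["response"])
    return minimal
```

For example, `redact("I was born on 12-03-1990, mail me at anna@example.com")` yields `"I was born on [DATE], mail me at [EMAIL]"`, and `prepare_feedback_record` returns a dictionary without the `user_id` and `device` keys.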

Access to sensitive data requires explicit consent

Many AI Companions access the full conversations of their users. Chai, for example, retains the full conversation for personalization and model safety. Users may, intentionally or inadvertently, share special categories of personal data18 such as sexual preferences, political opinions, and religious convictions.

The GDPR prohibits the processing of sensitive data unless explicit consent is requested or another exemption applies19. Providers need to request additional consent from users even to simply store sensitive data for model auditing. The intrusiveness of these data further challenges the notion of a balance with the "fundamental rights and freedoms" of users discussed previously.

Alternatively, Providers can consider avoiding sensitive topics through privacy enhancing techniques such as training the chatbot to avoid topics like politics or religion or proactively detecting unsafe messages20.

AI Companions are deceptive through their lack of transparency

In addition to a justifiable legal basis, the GDPR also puts forward transparency obligations. Transparency is not defined in the GDPR, but Recital 39 clarifies that users need to know which data are accessed and to what extent they are used21. Transparency implies intelligible communication (How?) about the involved controllers and processors (Who?) and the scope of the personal data they collect and further process (What?).

Transparency is not only a matter of good communication, but also one of lawfulness and fairness. Recital 39 clearly states that "Natural persons should be made aware of risks, rules, safeguards and rights in relation to the processing of personal data and how to exercise their rights in relation to such processing."

Most Providers, by contrast, mislead users by withholding essential information about personal data usage and downplaying risks. Such deception by omission impairs a user's ability to decide whether they want to use Companion apps and share personal data.

We unpack the notion of transparency in this chapter.

Unclear controller roles

As a foundational principle of the GDPR, users need to know who they can turn to for information and data subject rights. It is the starting point for determining responsibilities under the GDPR and under any contractual agreement with partners and vendors.

None of the Providers that we examined have bothered to cover controller roles in their privacy policy. One can assume Inflection operates as a controller as they own the entire value chain (cfr. 3.2). However, this is not the case for AI Companions with external pre-trained models and fine-tuning support such as Replika and Chai. As a case example, we argue Chai may allocate the following GDPR roles22:

- EleutherAI and Chai independently control the development of a pre-trained model.
- Chai and individual developers jointly control custom chatbots on the Chai app.
- External moderators process conversation logs on behalf of Chai.

As a key take-away, LM suppliers and developers are, in specific circumstances, equally accountable for harm caused by insufficient alignment (e.g., adversarial prompts, bias and misinformation) and security (e.g., unauthorized or unlawful access to conversations). Next, the allocation of responsibilities requires a layered view of the processing operations with shared, common, complementary or closely linked purposes. Such clarity is not only required for transparency23, but also for properly structured controller-processor or controller-controller agreements.

Incomplete privacy notices

Checking for completeness boils down to a tick-the-box exercise of Articles 13 and 14 GDPR. However, none of the Providers mentions:

- The retention periods, such as how long conversations are stored;
- The specific purposes related to training, fine-tuning and personalization;
- The named recipients24 or categories of recipients of the personal data;
- Any detail as to transfers to third countries or the corresponding mechanisms; or
- The existence of automated decision-making, including profiling, or any insight into the underlying logic and consequences thereof.25

Untransparent communication

Transparent communication essentially allows the average user to comprehend how personal data are used. The WP29 guidelines on transparency26 list conditions for transparent communication including:

- it must be concise, transparent, intelligible, and easily accessible (GDPR Article 12.1);
- clear and plain language must be used (GDPR Article 12.1); and
- the requirement for clear and plain language is of particular importance when providing information to children (GDPR Article 12.1).

These guidelines present two important challenges for Providers. First, in the absence of proper age verification, "intelligible" communication about privacy needs to speak to minors and seniors at the same time27. Without any understanding of their targeted or actual audience, Providers cannot tailor the way they present that information in their privacy notice. Second, conveying the essence of LMs and their risks to such a diverse audience without technical or legal jargon is a tall order.

In practice, most Providers don't even try to cover the basics. Character.ai, for example, manages to discuss its use of personal data without any reference to an LM or an AI, under the common denominator of 'Aggregated Information'28.

Deception by omission

The risks associated with the use of AI chatbots in general are well documented, both in practice and in research (also see our resources section). In addition to privacy concerns, risks include the perpetuation of bias, emotional dependency that can eventually lead to physical harm such as maiming or suicide, unwanted exposure to explicit content, manipulation, discrimination and the exacerbation of inequality and incitement to hatred.

Providers typically remain silent about any of those risks. Inflection, which deliberately positions itself as a 'safety-first' bot, downplays limitations of Pi such as bias and gullibility rather than informing users about safe usage.29 Neither the website, the terms, the privacy notice nor the app store communications adequately warn users about potentially incorrect, misleading or even violent conversations. The Inflection privacy notice does make one thing very clear: "[T]hese [security] measures are not a guarantee of absolute security and you acknowledge and accept that your use of our Services is ultimately at your own risk."

This is deception by omission. Privacy notices need to equip users to make a judgement call about their willingness to share personal data based on the intended use and risks involved30.

AI Companions are deployed at scale despite high risks

Lack of risk assessments

The GDPR is built around a risk-based approach. It requires a formal risk assessment ("Data Protection Impact Assessment" or "DPIA") for use cases with high risks to individuals. Such an exercise also paves the way for compliance with Data Protection by Design.

AI Companions entail multiple high-risk characteristics such as the "innovative use or new technological or organizational solutions" concerning "categories of vulnerable persons such as children"31 that mandate a DPIA prior to their public release.

The fact that AI Companions are deployed at scale with demonstrable infringements of the GDPR implies either that a DPIA has not been performed or that it has been performed poorly. Indeed, a valid DPIA would have, at the very minimum, required patching obvious shortcomings in the privacy notice of Providers before launch. The lack of a DPIA alone is sufficient to trigger an investigation by data protection authorities.

The General Product Safety Directive (GPSD) also requires a risk assessment before pushing consumer products into the market. The lack of model safety measures, age gates and signaling of risks also raises serious doubts on any actual risk assessments for consumer protection.

Ethical and social risks

DeepMind compiled an extensive overview of ethical and social risks32, which we briefly summarize and apply to AI Companions. Each of these topics merits a deep dive. It is inconceivable that AI Companions are deployed at scale without consideration of such fundamental risks both from a data and consumer protection point-of-view.

| RISK | DESCRIPTION | EXAMPLE AI COMPANION |
|---|---|---|
| Discrimination, Exclusion and Toxicity | These risks arise from the LM accurately reflecting natural speech, including unjust, toxic, and oppressive tendencies present in the training data. | Inadvertently generate toxic language such as hate speech, profanity, insults, threats, and so on. |
| Information Hazards | Harms that arise from the LM leaking or inferring true sensitive information. | Accurately propose ways to commit suicide. |
| Misinformation Harms | LM assigning high probabilities to false, misleading, nonsensical or poor quality information. | Deliberately spread misinformation in the run-up to an election. |
| Malicious uses | Actors using the language model to intentionally cause harm. | Prompt and finetune bots to deliberately respond in violent and abusive ways. |
| Human-Computer Interaction Harms | LM applications, such as Conversational Agents, that directly engage a user via the mode of conversation. | Oversell abilities such as memory or reciprocity. Perpetuate discriminatory associations such as making assistants female by default. |
| Automation, access, and environmental harms | LMs are used to underpin widely used downstream applications that disproportionately benefit some groups rather than others. | Replace tasks routinely performed by mental health professionals or professional coaches. |

AI Companions deliberately expose vulnerable users to these material risks

Inappropriate content for minors

Minors don't have any issue downloading and using AI Companions. Even if users explicitly state they are underage, AI Companions don't hold back sharing NSFW or toxic content. This lack of age controls was the main trigger behind the decision by the Garante to provisionally ban Replika in Italy.33 We observed three major flaws across a range of AI Companions:

- The user onboarding lacks any age verification mechanisms.
- When conversations take a turn towards NSFW, apps simply prompt the user to turn on the NSFW toggle on their smartphone.
- Chatbots completely ignore user statements about age such as "I am 13 years old".
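Reacting to an explicit age statement is technically simple. The sketch below is a hypothetical safeguard, not a description of any Provider's implementation: it detects self-declared ages in a chat message so that an application could switch off NSFW content or end the session. The pattern deliberately requires a suffix such as "years old" to limit false positives, so it complements an age gate at onboarding rather than replacing one.

```python
import re

# Matches statements such as "I am 13 years old" or "I'm 12 yo".
# The suffix is required so that "I am 5 minutes late" is not flagged.
AGE_STATEMENT = re.compile(
    r"\bi\s*(?:am|'m)\s+(\d{1,2})\s*(?:years?\s+old|yo\b|y/o)",
    re.IGNORECASE,
)


def declared_age(message):
    """Return the age a user states about themselves, or None if absent."""
    match = AGE_STATEMENT.search(message)
    return int(match.group(1)) if match else None


def is_declared_minor(message, adult_age=18):
    """True when the message contains a self-declared age below adult_age."""
    age = declared_age(message)
    return age is not None and age < adult_age
```

An application could call `is_declared_minor` on every incoming message and, on a positive result, restrict the conversation and trigger a proper age verification step.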

Providers shirk accountability for underage use and simply state they don't intend to target users under the age of 18.34

Targeting of vulnerable groups

It is no secret that Providers are deliberately buying into rising numbers of lonely and isolated individuals, which the US Surgeon General recently labelled a "loneliness epidemic".35 Noam Shazeer, one of Character.AI's founders, told the Washington Post that he hoped the platform could help "millions of people who are feeling isolated or lonely or need someone to talk to." Replika notoriously used social media advertisements that display users "as lonely unable to form connections in the real world"36. A despicable practice.

"Human-Computer Interaction Harms" are a significant risk for any user engaging with AI chatbots, let alone for vulnerable users who encounter an idealised partner. The major backlash and despair after Replika pulled back erotic roleplay illustrates just how vulnerable it leaves addicted users37.

Commercial incentives towards developers aggravate the risk of emotional attachment and addiction. Chai incentivizes developers to build bots that increase conversation length38 and generates revenues through targeted advertising based on user profiles.

Recommendations

Data protection authorities

DPAs should follow the lead of the Garante and outright ban any AI Companion that blatantly violates the GDPR and the ePD. Stephen Almond from the Information Commissioner's Office (ICO) has recently warned against rushing deployment of generative AI at the expense of data protection. "We will be checking whether businesses have tackled privacy risks before introducing generative AI – and taking action where there is risk of harm to people through poor use of their data. There can be no excuse for ignoring risks to people's rights and freedoms before rollout"39.

We acknowledge DPAs are understaffed and underfunded to keep up with the AI arms race. As a telling example, the Autoriteit Persoonsgegevens received a meager EUR 1m for algorithmic supervision as of 202340. Even if sufficient resources are mobilized, significant cases may take years of case building before reaching a decision.

Despite such obvious resource constraints, DPAs have a powerful pressure mechanism readily available. DPAs could request insight into the DPIA of AI Companions. In response, they can either (a) urge Providers to (re)submit a valid DPIA promptly, (b) validate that risks are sufficiently mitigated or (c) confirm that high risks remain unmitigated and initiate the prior consultation procedure of Article 36 GDPR.

AI Companion Providers

Providers have to make major improvements on all fronts. We illustrate a potential roadmap that starts with a comprehensive DPIA as a blueprint for safer data processing.

| PHASE | DESCRIPTION |
|---|---|
| Conduct a DPIA | Assess data protection risk holistically and specify a granular action plan to mitigate high risks. |
| Set up age controls | AI Companion applications embed compelling age gates such as: two-factor authentication with a mobile device; and integration with reputable age verification services41. |
| Consent choices | Adopt proactive consent choices for (a) tracking conversation history, (b) profiling users, (c) serving targeted ads based on user profiles and (d) accessing sensitive insights about users. |
| Conditional access to user data | Pair consent choices with data controls that: filter conversation access in line with user preferences; automatically detect and filter sensitive attributes. |
| Transparent notices | Display layered privacy notices with non-technical and more advanced discussions about data processing and risks. |
| Data minimization | Go beyond the removal of direct identifiers by also avoiding singling out through quasi-identifiers (e.g., car enthusiast living in New Jersey, age 36, driving a pink Cadillac)42 through architectures such as differential privacy and federated learning. Offer users an option to chat pseudonymously based on fictional attributes. |
| Transparency on safety and alignment | AI alignment and safety is a rapidly evolving domain. Rather than prescribing specific approaches, we recommend that Providers specifically report safety and alignment measures and regularly publish model benchmarks on a range of metrics such as accuracy, toxicity and bias.43 |
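The "Consent choices" and "Conditional access to user data" phases can be sketched as a simple gate in front of every processing purpose. The example below is a minimal illustration under stated assumptions: the four purposes mirror items (a) to (d) in the roadmap, every choice defaults to "no consent", and each message carries a hypothetical `sensitive` flag that an upstream detector is assumed to have set.

```python
from dataclasses import dataclass

# The four purposes from the roadmap; names are chosen for this example.
PURPOSES = ("conversation_tracking", "profiling", "targeted_ads", "sensitive_data")


@dataclass(frozen=True)
class ConsentChoices:
    """One opt-in flag per purpose; nothing is allowed by default."""
    conversation_tracking: bool = False
    profiling: bool = False
    targeted_ads: bool = False
    sensitive_data: bool = False


def may_process(consent, purpose):
    """Unknown purposes are denied rather than silently allowed."""
    if purpose not in PURPOSES:
        return False
    return getattr(consent, purpose)


def messages_for_purpose(messages, consent, purpose):
    """Return only the messages the user agreed to share for this purpose."""
    if not may_process(consent, purpose):
        return []
    if consent.sensitive_data:
        return list(messages)
    # Without explicit consent for sensitive data, flagged messages are withheld.
    return [m for m in messages if not m["sensitive"]]
```

A user who opts in to profiling but not to sensitive data would thus expose only non-flagged messages to the profiling pipeline, while the advertising pipeline would receive nothing.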

Users

Users can prevent a lot of harm by learning about the limitations of AI Companions, setting boundaries on their usage, and mindfully sharing personal information. Specific advice on this topic goes beyond the scope of this article. We do offer two key recommendations from a data protection perspective.

Know your rights

Users can always exercise their data subject rights towards AI Companions, for instance to request access to all their personal data or to have them erased. The privacy policy of AI Companions typically points to an e-mail address or form to submit such requests, but users can equally use any contact method they prefer.

Speak up to your local authority

If users feel their privacy has been compromised, they can lodge a complaint with their national DPA. DPAs typically offer more information on their website as well as an online form to submit a complaint.

Backup

A possible allocation of GDPR roles for the Chai app

Pre-trained model

EleutherAI has trained GPT-J 6B based on "The Pile", an open source dataset of more than 825 gigabytes comprising 22 smaller datasets for training language models.44 EleutherAI clearly acts as a controller when compiling sources with personal data within "The Pile". It does not, however, seem to support adoption of its LMs through data curation or reinforcement learning. The crude responses from GPT-J 6B demonstrate the model can't be deployed as such.

Chai has leveraged The Pile to create their own finetuned version(s) of GPT-J 6B. It also acts as a controller of personal data as it handles training data with personal data and hosts a consumer-facing application leveraging this LM.

In the absence of a direct relationship or any joint processing, both parties likely operate as independent controllers. Their organizational objectives also differ strongly: EleutherAI aims to create a reproducible dataset for LM research, while Chai commercializes its LM through the Chai application.

Chai and developer community

Chai allows users to create custom bots by writing a brief description, first message, facts the bot will remember and specific prompts (e.g., I am talking to Eliza because I feel lonely).

More importantly, Chai has developed a broad developer community to build and deploy custom bots with additional parameters and offline reinforcement learning. Chai even launched a $1 Million competition for fine-tuning and training the most engaging chat models.

The CJEU ruling in Wirtschaftsakademie established that administrators of Facebook fan pages are active participants in determining how personal data are processed45. Similarly, Chai.ml developers bootstrap the direction of conversations when creating a bot46.

For individual users, the household exemption of GDPR applies. However, developers have clear commercial incentives47 to finetune their chatbots and aim to reach as many users as possible. The Chai Language Modelling Competition illustrates this point.

Developers therefore have a joint responsibility with Chai for the conversations of custom bots. They should be considered jointly accountable for any harm these bots cause (e.g., when prompting bots to spread misinformation).

Footnotes

-

Justin Weinberg, 'Dennett on AI: We Must Protect Ourselves Against "Counterfeit People" - Daily Nous' <https://dailynous.com/2023/05/17/dennett-on-ai-we-must-protect-ourselves-against-counterfeit-people/, https://dailynous.com/2023/05/17/dennett-on-ai-we-must-protect-ourselves-against-counterfeit-people/\>. ↩

-

Emily M Bender and Alex Hanna, 'AI Causes Real Harm. Let’s Focus on That over the End-of-Humanity Hype' (Scientific American) <https://www.scientificamerican.com/article/we-need-to-focus-on-ais-real-harms-not-imaginary-existential-risks/>. ↩

-

'How Should AI Systems Behave, and Who Should Decide?' <https://openai.com/blog/how-should-ai-systems-behave>. "Unlike ordinary software, our models are massive neural networks. Their behaviors are learned from a broad range of data, not programmed explicitly. Though not a perfect analogy, the process is more similar to training a dog than to ordinary programming. An initial "pre-training" phase comes first, in which the model learns to predict the next word in a sentence, informed by its exposure to lots of Internet text (and to a vast array of perspectives). This is followed by a second phase in which we "fine-tune" our models to narrow down system behavior." ↩

-

'Aligning Language Models to Follow Instructions' <https://openai.com/research/instruction-following>. ↩

-

A widely used example is the OpenAI moderation tool. ↩

-

GDPR, Recital 18: "[GDPR] does not apply to the processing of personal data by a natural person in the course of a purely personal or household activity and thus with no connection to a professional or commercial activity." ↩

-

'1.2 Billion Euro Fine for Facebook as a Result of EDPB Binding Decision | European Data Protection Board' <https://edpb.europa.eu/news/news/2023/12-billion-euro-fine-facebook-result-edpb-binding-decision_en>. ↩

-

Case C-252/21 Meta Platforms Inc., formerly Facebook Inc., Meta Platforms Ireland Ltd, formerly Facebook Ireland Ltd, Facebook Deutschland GmbH v Bundeskartellamt [2023] ECLI:EU:C:2023:537, para 98. ↩

-

EDPB, Guidelines 2/2019 on the processing of personal data under Article 6(1)(b) GDPR in the context of the provision of online services to data subjects, https://edpb.europa.eu/sites/default/files/files/file1/edpb_guidelines-art_6-1-b-adopted_after_public_consultation_en.pdf ↩

-

Replika privacy policy, "Providing you a personalized AI companion and allowing you to personalize your profile, interests, and AI companion. Enabling you to have individualized and safe conversations and interactions with your AI companion, and allowing your AI companion to learn from your interactions to improve your conversations. Syncing your Replika history across the devices you use to access the Services." ↩

-

EDPB, Guidelines 2/2019, para 57, "Where personalisation of content is not objectively necessary for the purpose of the underlying contract, for example where personalised content delivery is intended to increase user engagement with a service but is not an integral part of using the service, data controllers should consider an alternative lawful basis where applicable." ↩

-

GDPR, art. 4(11). See also: European Data Protection Board, 'Guidelines 05/2020 on Consent under Regulation 2016/679' <https://edpb.europa.eu/sites/edpb/files/files/file1/edpb_guidelines_202005_consent_en.pdf> accessed 15 January 2023. ↩

-

Pursuant to art. 5(3) ePD. ↩

-

Article 29 Working Party, 'Opinion 06/2014 on the Notion of Legitimate Interests of the Data Controller under Article 7 of Directive 95/46/EC' <https://ec.europa.eu/justice/article-29/documentation/opinion-recommendation/files/2014/wp217_en.pdf> accessed 14 January 2023. ↩

-

'Facial Recognition: 20 Million Euros Penalty against CLEARVIEW AI' <https://www.cnil.fr/en/facial-recognition-20-million-euros-penalty-against-clearview-ai>. ↩

-

On 12 April 2023, the Garante also imposed on the company the obligation to provide the information required by the Italian law on the "ChatGPT" system developed by the company OpenAI on Italian territory, citing, among other failures, the lack of "an appropriate legal basis for the collection of personal data and their processing for the purpose of training the algorithms that underlie the operation of ChatGPT". Garante per la protezione dei dati personali, Provvedimento del 30 marzo 2023 [9870832], available at: https://www.garanteprivacy.it/web/guest/home/docweb/-/docweb-display/docweb/9870832. ↩

-

Quasi-identifiers are pieces of information that don't allow identifying an individual by themselves but can be combined with other quasi-identifiers to create a unique identifier. ↩

-

GDPR art. 9.1 and 10 ↩

-

See the exemptions in GDPR art. 9.2. ↩

-

Jing Xu and others, 'Recipes for Safety in Open-Domain Chatbots' (arXiv, 4 August 2021) <http://arxiv.org/abs/2010.07079>. ↩

-

GDPR Recital 39: "It should be transparent to natural persons that personal data concerning them are collected, used, consulted or otherwise processed and to what extent the personal data are or will be processed." ↩

-

A GDPR role analysis always needs to start from a detailed context of processing. This assessment is based on publicly available information only and may differ from the actual arrangements for Chai.ml. ↩

-

In line with GDPR Article 26.2, the essence of the arrangement between the data controllers needs to be available to users. ↩

-

Case C-154/21 RW v Österreichische Post AG [2023] ECLI:EU:C:202, paras 39-42. ↩

-

While this requirement is a priori only mandatory for "decisions based exclusively on automated processing" within the meaning of Article 22(1) of the GDPR, the WP29 specifies in its guidelines on automated decisions that "it is nevertheless good practice to provide this information". ↩

-

WP29, WP260 Rev.01 ↩

-

WP29 points out that parental consent does not imply language can be directed at parents. ↩

-

Excerpt from the Character.ai privacy notice, https://beta.character.ai/privacy:

"2. How We Use Personal Information

We may use Personal Information for the following purposes:

To provide and administer access to the Services; To maintain, improve or repair any aspect of the Services, which may remain internal or may be shared with third parties if necessary; To inform you about features or aspects of the Services we believe might be of interest to you; To respond to your inquiries, comments, feedback, or questions; To send administrative information to you, for example, information regarding the Services or changes to our terms, conditions, and policies; To analyze how you interact with our Service; To develop new programs and services; To prevent fraud, criminal activity, or misuses of our Service, and to ensure the security of our IT systems, architecture, and networks; and To comply with legal obligations and legal process and to protect our rights, privacy, safety, or property, and/or that of our affiliates, you, or other third parties.

Aggregated Information. We may aggregate Personal Information and use the aggregated information to analyze the effectiveness of our Services, to improve and add features to our Services, to conduct research (which may remain internal or may be shared with third parties as necessary) and for other similar purposes. In addition, from time to time, we may analyze the general behavior and characteristics of users of our Services and share aggregated information like general user statistics with third parties, publish such aggregated information or make such aggregated information generally available." ↩

-

"Bias: Language models inherit biases from their training data. This may include the adoption of various racial, gender, and other stereotypes. While we have made significant efforts to limit these behaviors in our AI, they may still emerge under various conditions.

Gullibility: Language models can occasionally be tricked into producing unsafe or inappropriate content on the premise that it is "hypothetical," "for research," or describing an imaginary situation. Our AIs exhibit similar patterns, particularly when placed under sustained pressure from a user." ↩

-

WP29 sets clear expectations: "In particular, for complex, technical or unexpected data processing, WP29's position is that, as well as providing the prescribed information under Articles 13 and 14 (dealt with later in these guidelines), controllers should also separately spell out in unambiguous language what the most important consequences of the processing will be: in other words, what kind of effect will the specific processing described in a privacy statement/notice actually have on a data subject?" ↩

-

'ARTICLE29 - Guidelines on Data Protection Impact Assessment (DPIA) (Wp248rev.01)' <https://ec.europa.eu/newsroom/article29/items/611236>. ↩

-

Laura Weidinger and others, 'Ethical and Social Risks of Harm from Language Models' (arXiv, 8 December 2021) <http://arxiv.org/abs/2112.04359>. ↩

-

'Provvedimento del 2 febbraio 2023 [9852214]' <https://www.garanteprivacy.it:443/home/docweb/-/docweb-display/docweb/9852214>. "Whereas the tests carried out on [Replika] as reported by the abovementioned media outlets show that no gating system is in place for children and that utterly inappropriate replies are served to children by having regard to their degree of development and self-conscience" ↩

-

Inflection simply pushes responsibility to the user. 'Pi, Your Personal AI' <https://heypi.com/policy> accessed 11 August 2023: "Our Services are not intended for minors under the age of 18. We do not knowingly collect or solicit personal information from minors under the age of 18. If you are a minor under the age of 18, please do not attempt to use our Services, register an account with us, or send any personal information to us." ↩

-

'Our Epidemic of Loneliness and Isolation'. ↩

-

Samantha Cole, '"It's Hurting Like Hell": AI Companion Users Are In Crisis, Reporting Sudden Sexual Rejection' (Vice, 15 February 2023) <https://www.vice.com/en/article/y3py9j/ai-companion-replika-erotic-roleplay-updates>. ↩

-

Idem ↩

-

Both in terms of the percentage of replies and median conversation length, see https://chai.ml/dev/ ↩

-

'Don't Be Blind to AI Risks in Rush to See Opportunity -- ICO Reviewing Key Businesses' Use of Generative AI' <https://ico.org.uk/about-the-ico/media-centre/news-and-blogs/2023/06/don-t-be-blind-to-ai-risks-in-rush-to-see-opportunity/>. ↩

-

'Coördinatie toezicht algoritmes & AI | Autoriteit Persoonsgegevens' <https://www.autoriteitpersoonsgegevens.nl/themas/algoritmes-ai/coordinatie-toezicht-algoritmes-ai>. ↩

-

For example, by cross-checking government databases or credit card information. ↩

-

Various techniques from straightforward generalization of prompt characteristics to more advanced differential privacy guarantees need to be deployed to truly anonymize learning data. ↩

-

The Stanford AI Index offers a good overview of fairness and bias metrics, available at https://aiindex.stanford.edu/report/ ↩

-

The Pile dataset can be accessed at: https://pile.eleuther.ai/. ↩

-

Wirtschaftsakademie: "the creation of a fan page on Facebook involves the definition of parameters by the administrator, depending inter alia on the target audience and the objectives of managing and promoting its activities, which has an influence on the processing of personal data for the purpose of producing statistics based on visits to the fan page. The administrator may, with the help of filters made available by Facebook, define the criteria in accordance with which the statistics are to be drawn up and even designate the categories of persons whose personal data is to be made use of by Facebook. Consequently, the administrator of a fan page hosted on Facebook contributes to the processing of the personal data of visitors to its page" ↩

-

Users can specify a brief description, first message, facts the bot will remember and specific prompts (e.g., I am talking to Eliza because I feel lonely) ↩

-

GDPR recital 18 clearly states that the household exemption does not apply to a natural person in connection to a professional or commercial activity. ↩